Beyond Chatbots: The Shift to Agentic AI

Effective delegation is key.

You may have heard some talk lately about AI Agents or Agentic AI.

Don’t worry: no robot Maxwell Smart is joining the county Sheriff’s Department. AI Agents are systems that autonomously take on and complete tasks using automation and generative AI (GenAI). These Agents can be native to an AI chatbot like ChatGPT, or they can be purpose-built using tools like Zapier or n8n.

This is a longer post, but my goals are simple: help you understand AI agents, share a demo you can try, and explain why careful deployment of AI agents inside an organization may be the quickest—and most reliable—way for businesses to benefit from Generative AI.

Unconstrained AI Agents

AI agents are often pitched as personal assistants that can handle multi-step work without supervision. That would be great, but as with human assistants, good results require good delegation. If you don’t spell out how you want the job done, the agent may choose an approach you didn’t intend. Odder still, if you give an agent the same task again, it may complete it a different way each time.

For example, during the past couple of months, in my talks, I’ve asked ChatGPT to predict the Super Bowl winner as a demonstration. Here is my prompt:

Please gather all the data you can about which team will win the super bowl. Please put any data you collect in a csv file. and then make a prediction for me.

Each time I use the same prompt, days or weeks apart, I get a different approach. The prediction changes too, which is expected as the season progresses, so it is hard to tell whether the change in approach is influencing the prediction.

The first time, it browsed each team’s website, compiled every stat it could find into a spreadsheet, wrote a Python script to analyze the collected data, and then made a prediction—the KC Chiefs.

Other times, it pulled together pundit forecasts on likely matchups and chances of winning, ran a similar analysis, and made a prediction—again the KC Chiefs.

And other times, it pulled data from a single source, ran an analysis, and made a prediction—the Buffalo Bills. (Sorry Randy S.)

This is a little unsettling, since we expect computers to be consistent in their operations. But I never told the ChatGPT Agent how to tackle the problem, which sources to use, what data mattered, or what to ignore. So AI Agents, while useful, put us back in the same boat as AI chatbots: we have to train everyone in our organization to give good prompts, and the output still has to be inspected and double-checked. And the likelihood that everyone in an organization can delegate well to an AI Agent seems small to me…

Note: While AI companies like to say that AI Agents “reason,” that is a misnomer, as these systems don’t reason the way you or I do. And if they are given too little supervision, they can go off the rails. See “AI’s Wrong Answers Are Bad. Its Wrong Reasoning Is Worse” from IEEE, published Dec 2, 2025.

Constrained AI Agents

A better way to use agents is to have a small team that knows the process being “agentized” sit down with IT staff and design an agent with clear instructions inside a shared system. Using tools like Zapier (paid service) or n8n (source-available, bring your own hosting), you predefine the tools and automation steps and call a large language model like ChatGPT only when needed. Best of all, you can let people trigger these agents with a simple email (or web form), just like delegating to a colleague.

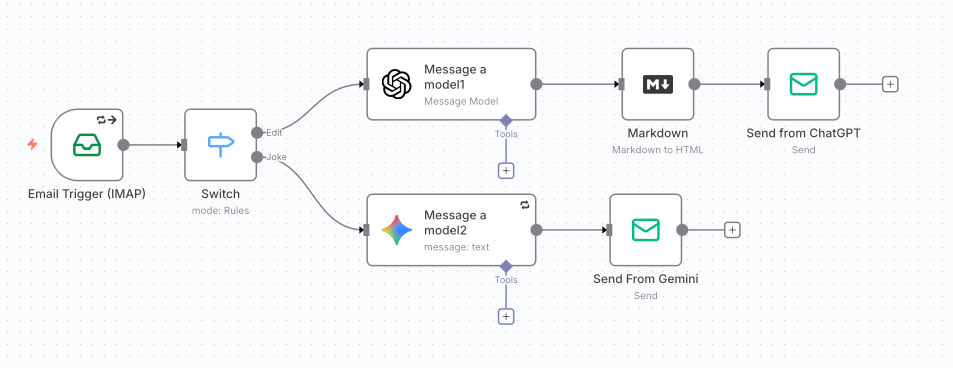

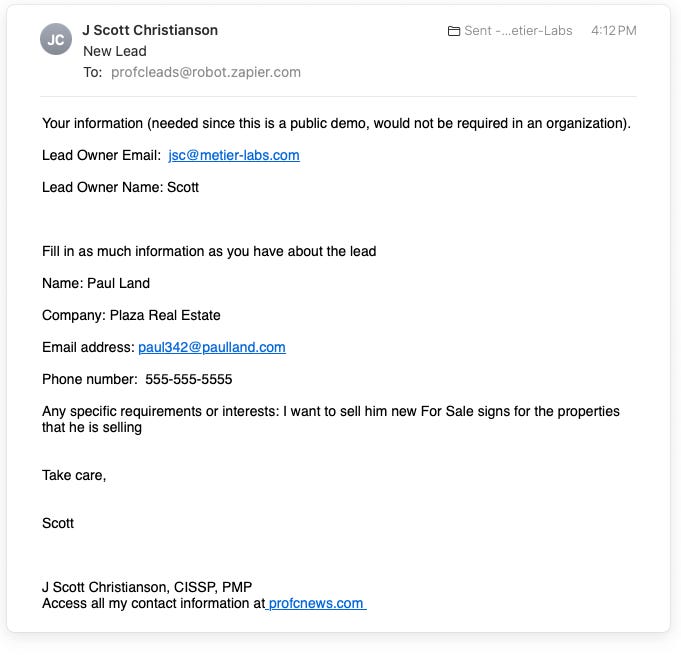

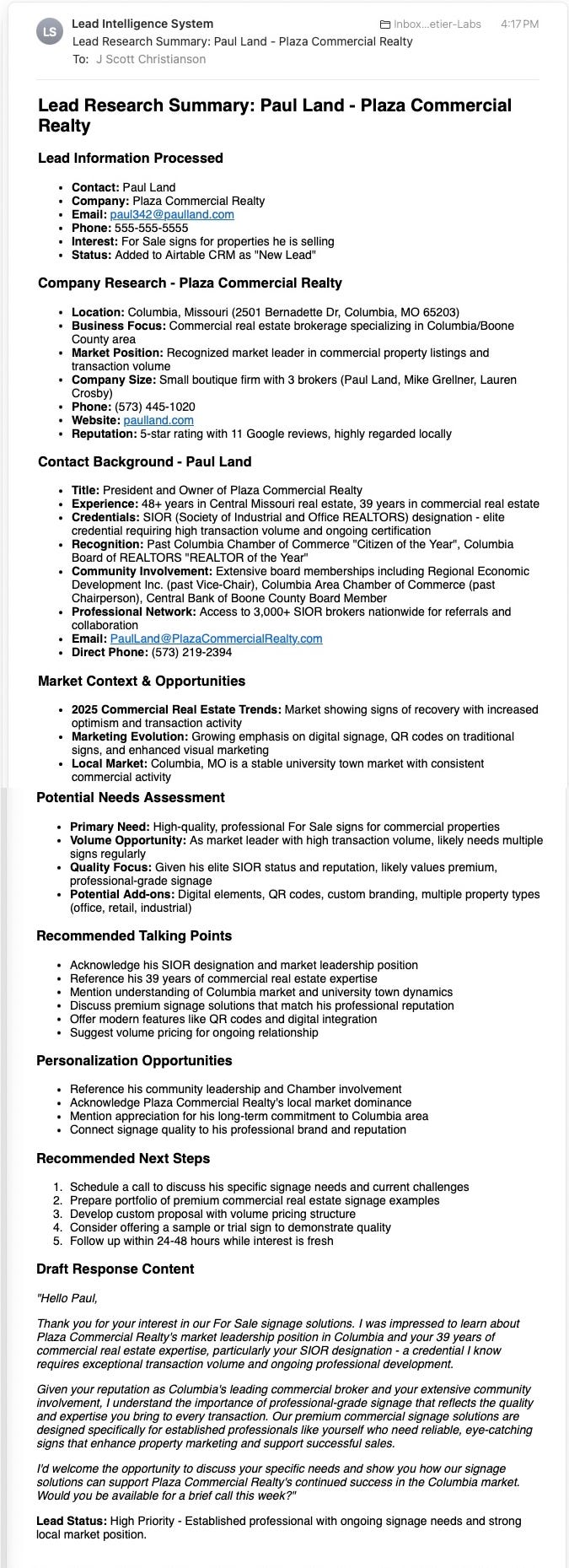

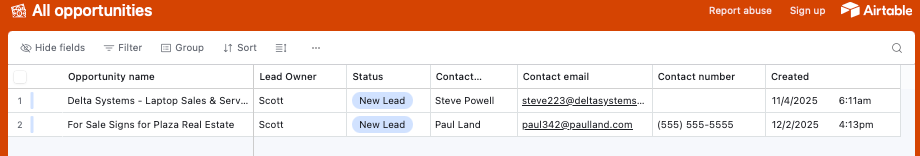

For example, you could email an AI agent the contact information for a new lead. The agent could add the lead to your CRM, research the lead, then reply with a concise summary and a draft email for you to review and send. That is exactly what the agent shown below (built in Zapier) does.

Here is a message sent to this agent:

And here is the response:

It also added the lead to a database of potential customers, just based on the email.

After multiple runs, several issues surfaced—some from my Zapier inexperience, others from the nature of LLMs, and a few from both. About half the time, the lead and lead-owner emails get swapped, so the reply goes to the lead—likely a Zapier design issue on my end. When a company name has a better-known twin (e.g., Delta Systems the manufacturer vs. a local software firm), the agent muddles the company’s mission. Both problems are fixable: give the LLM clearer instructions and include more context in the initial email.
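One way to picture both fixes is to label the fields in the triggering email and parse them before any LLM step runs. The sketch below is plain Python, not something Zapier generates. The field labels (`Lead name`, `Lead email`, `Company`, `Company website`), the `LeadRequest` class, and both functions are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical labels the sender is asked to include in the email body.
REQUIRED_FIELDS = ("lead name", "lead email", "company")


@dataclass
class LeadRequest:
    owner_email: str   # whoever sent the request; the reply always goes here
    lead_name: str
    lead_email: str    # stored with the lead, never used as the reply address
    company: str
    website: str = ""  # optional context to tell similarly named companies apart


def parse_lead_email(sender: str, body: str) -> LeadRequest:
    """Turn a labeled email body into a structured request."""
    fields = {}
    for line in body.splitlines():
        if ":" not in line:
            continue  # skip greetings, signatures, and blank lines
        label, value = line.split(":", 1)
        fields[label.strip().lower()] = value.strip()

    missing = [name for name in REQUIRED_FIELDS if not fields.get(name)]
    if missing:
        raise ValueError(f"Missing fields: {', '.join(missing)}")

    return LeadRequest(
        owner_email=sender,
        lead_name=fields["lead name"],
        lead_email=fields["lead email"],
        company=fields["company"],
        website=fields.get("company website", ""),
    )


def reply_recipient(request: LeadRequest) -> str:
    """The summary and draft go back to the lead owner, not to the lead."""
    return request.owner_email
```

Because the reply address comes only from the sender of the request, the lead's address cannot end up in the "To" line by accident, and the optional website gives the research step something concrete to separate one Delta Systems from another.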

For this project, I used Zapier’s agent builder, Copilot (an LLM trained to create agents in Zapier), to create the Agent. It seems odd to use an AI to create another AI system, but it is more common than you might think. After a brief back-and-forth, my agent was built in eight minutes or less. Even better, Zapier offers templates you can copy and paste directly into the agent interface.

An AI Agent for you to try

A couple of posts ago, I shared my prompt for editing this newsletter one paragraph at a time. At the time, only ChatGPT’s paid users could access my “CustomGPT” to use my prompt quickly. Using the n8n agent builder, I built an agent with the same instructions. To use the Agent, email it at process@christiansonjs.com with the subject Edit, paste the text you want revised into the body, and hit send. It will email you back. Try it now if you want.

Building this agent was as simple as dragging and dropping the steps, adding credentials, and testing (BTW, like Zapier, n8n offers templates you can copy and paste directly into the agent interface). OK, perhaps I also needed to chat with my former student Issac H when I got confused. 🤣

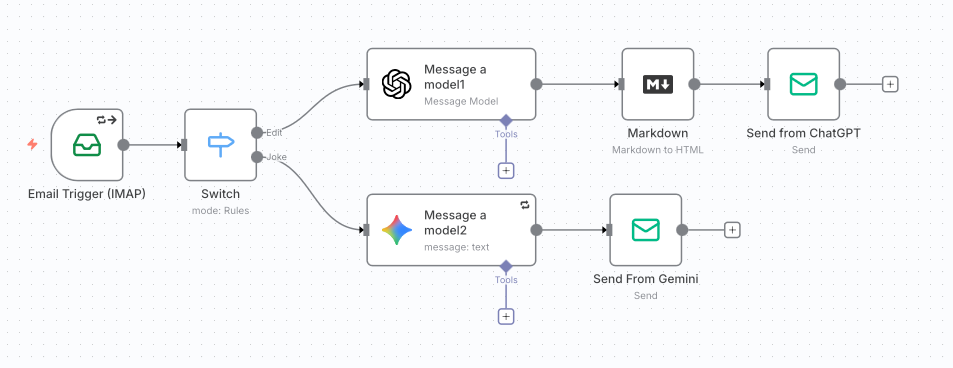

I wanted this agent to perform different actions based on the email subject, so I added an If node. Messages are routed to OpenAI/ChatGPT for editing when the subject is Edit and to Google Gemini when the subject is Joke. I configured the “Message a model” nodes accordingly, then added the final steps to email the response back.

If you want a “dad joke,” you just send an email with the subject of “Joke” and my agent will email you back a “safe for work” joke based on the first word in the body of your email. Try it here.
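For readers who think in code, the subject-based routing can be sketched in a few lines of Python. This is not how n8n implements it, only an illustration of the logic. The function names are hypothetical, the two handlers are placeholders that stand in for the model calls, and the case-insensitive subject match is my assumption.

```python
from typing import Callable


def edit_text(body: str) -> str:
    """Placeholder for the step that sends the body to an editing model."""
    return f"[edited] {body.strip()}"


def tell_joke(body: str) -> str:
    """Placeholder for the joke step; only the first word of the body is used."""
    words = body.split()
    topic = words[0] if words else "anything"
    return f"[safe-for-work joke about] {topic}"


# Subject line -> handler. Matching is case-insensitive (an assumption).
ROUTES: dict[str, Callable[[str], str]] = {
    "edit": edit_text,
    "joke": tell_joke,
}


def handle_email(subject: str, body: str) -> str:
    """Pick a handler from the subject and return the text to email back."""
    handler = ROUTES.get(subject.strip().lower())
    if handler is None:
        return "Unrecognized subject. Use Edit or Joke."
    return handler(body)
```

Adding a third action would mean adding one entry to `ROUTES`, which mirrors adding another branch in the visual workflow.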

There: with about 30 minutes of work, I have deployed my AI Agent to the approximately 4.59 billion people who have email. It is also available to other bots, but let’s not go down that road…

Emailing AI Agents is the future of work (?)

With the right people involved, most medium-to-large businesses can quickly build an army of agents to automate processes that require research or the generation of new text, images, or even video.

AI agents can be simple or complex, and can include easy ways for humans to double-check the agent’s work before it reaches a customer. In fact, customer-facing agents are probably a bad idea; see the post below from Kai Wang.

What I like best, though, is how purpose-built agents make AI easy for end users and deliver consistent results across an organization.

Since GenAI entered our work and personal lives, getting good results has meant learning ever more prompt-engineering techniques. But is it realistic to expect every employee to keep up with the latest prompting tricks, choose the right platform and model, and evaluate the output’s quality? I don’t think so. But they do know how to use email.

Once built and tested, an AI Agent can be rolled out organization-wide via a dedicated email address: users just forward the required information and get consistent results back. If the model or platform changes, IT updates the agent behind the scenes and no one notices (switching from OpenAI to Google Gemini is seamless from the user’s perspective). This allows organizations to scale generative AI to hundreds or thousands of users with a tool they already know: email.
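Here is a rough sketch of why a model swap can be invisible to users: the agent depends on a small interface rather than on a particular vendor. Everything here is hypothetical, including the `TextModel` interface, the two stub providers, and the `EmailAgent` class. A real provider class would call that vendor's API instead of returning a canned string.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything that can turn a prompt into text (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class StubProviderA:
    """Stand-in for one vendor's model."""

    def complete(self, prompt: str) -> str:
        return f"provider-a reply ({len(prompt)} chars of prompt)"


class StubProviderB:
    """Stand-in for a second vendor's model."""

    def complete(self, prompt: str) -> str:
        return f"provider-b reply ({len(prompt)} chars of prompt)"


class EmailAgent:
    """Combines fixed instructions with the email body and asks the model."""

    def __init__(self, instructions: str, model: TextModel) -> None:
        self.instructions = instructions
        self.model = model

    def handle(self, email_body: str) -> str:
        prompt = f"{self.instructions}\n\n{email_body}"
        return self.model.complete(prompt)
```

Swapping vendors is then a one-line change on the IT side, for example `agent.model = StubProviderB()`, while the email address and the instructions that users rely on stay the same.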

It may sound odd to call email the interface of the future, but there’s a reason it endures: it’s platform-agnostic, simple to use, built on open standards not tied to any one company, and usable across a wide range of hardware.

Another huge plus of this approach to deploying AI is that it lets organizations put guardrails on GenAI use. Take away access to generic tools like ChatGPT, which are notorious for leading users down rabbit holes and producing sloppy work, and update everyone’s email address book with purpose-built AI Agents that do only the things the organization has deemed appropriate for AI use.

Most businesses already have the ingredients to use AI agents; it’s a matter of identifying and prioritizing workflows to offload. The real payoff is freeing people from routine digital drudgery so they can focus on things AI can’t do: creativity, service, and strategy. The best agents will act like reliable digital colleagues, handling entry-level tasks independently.

Book Me for Your Next Conference or Event

After nearly two decades teaching and building innovative programs at the University of Missouri, I’m now focusing more of my time on keynote speaking and workshops—helping organizations make sense of the emerging technologies reshaping work, learning, and leadership.

If your organization, conference, or leadership team is exploring how to navigate AI and emerging technologies responsibly—and productively—I’d love to talk.

For more details, topics, and booking information click here.

👍 Products I Recommend

Products a card game for workshop ideation and ice breakers (affiliate link). I use this in my workshops and classes regularly. Made by a former Mizzou student Aaron H.

📆 Upcoming Talks/Classes

I will be presenting a “Lunch and Learn” at the Daniel Boone Library in collaboration with the League of Women Voters on “AI and Democracy — Guardrails We Can Choose” on December 10th at noon. Details here.

I will be teaching a short course about AI, “Thinking Machines, Changing Minds: How AI Is Shaping Work and Wisdom,” for Osher during the Winter 2026 semester. Details and registration will be available here soon.